AI Conversion

One of the amazing new possibilities with AI generation is the ability to take a concept and quickly iterate on it until it becomes something different.

Stable Diffusion offers several very useful tools for this sort of conversion. The ones I use most are Image to Image (img2img), Inpainting, and ControlNet.

Img2img allows you to use an existing image as part of your input in order to iterate on it and produce something similar. You can do anything from lightly denoising it to creating a picture that only barely uses the same general colors. I normally work somewhere in between those two extremes.
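How far a result drifts from the input is usually controlled by a denoising strength value between 0 and 1. Here is a minimal sketch of what that value does, assuming the step scaling used by the Hugging Face diffusers img2img pipeline. The function name `img2img_steps` is made up for illustration, and other front ends may handle the setting differently.

```python
def img2img_steps(num_inference_steps: int, strength: float) -> int:
    """Return how many denoising steps actually run for a given strength.

    Assumption: this mirrors the step scaling in the diffusers img2img
    pipeline. The function name is hypothetical, for illustration only.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    # Low strength skips most of the schedule, so the output stays close
    # to the input image. Strength 1.0 runs the full schedule.
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    for strength in (0.25, 0.5, 0.75, 1.0):
        steps = img2img_steps(40, strength)
        print(f"strength={strength}: {steps} of 40 steps")
```

With a low strength only a few steps run, which is why the picture barely changes. Near 1.0 the input is almost fully replaced by noise first, so only the broad colors and layout tend to survive.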

Inpainting is really good for changing specific details. You can erase objects, or add new ones, and it can also be used just to clean up a picture. This is the tool that I use the most.
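The core idea behind inpainting is a mask: only the masked area is replaced, and everything else is kept. The sketch below shows just that masking idea on tiny grayscale grids. It is a conceptual illustration, not how any particular tool implements it, and `composite_inpaint` is a hypothetical helper. Real tools generate the new pixels with the model and do considerably more work around the mask edges.

```python
from typing import List

Image = List[List[int]]    # grayscale pixel values, kept simple on purpose
Mask = List[List[bool]]    # True marks a pixel that should be repainted


def composite_inpaint(original: Image, generated: Image, mask: Mask) -> Image:
    """Take generated pixels where the mask is True, original pixels elsewhere."""
    return [
        [gen if masked else orig for orig, gen, masked in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


if __name__ == "__main__":
    original = [[10, 20], [30, 40]]
    generated = [[1, 2], [3, 4]]      # stand-in for model output
    mask = [[False, True], [False, False]]
    print(composite_inpaint(original, generated, mask))
```

Only the single masked pixel changes here, which is the same reason inpainting works well for erasing or adding one object without disturbing the rest of the picture.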

ControlNet is perhaps one of the most powerful tools for Stable Diffusion. It allows you to use a variety of methods to lock down elements of an image and force a generation to stay true to a specific idea. It can be used together with other generation tools. It’s great for artists who want, well, control over the outcome of their work, rather than completely randomized generations.

Below are some examples of how I’ve used AI to improve designs. Bear in mind that most of these did not use any one tool alone to reach the final stage, but I’ve tried to sort them by the method that I used most in each case. If you are curious about my process, then please click the button below.

Dad in the Car

I took this photo of my dad and traced it to give it a cartoon look. I later brought it into Stable Diffusion to give it an anime look and add my mom to the image.

Rooftop Couple

Here I threw together a very rough scene layout in MS Paint and put a stock photo on top. I recolored the clothes and hair to match what I wanted and ran it through img2img. As you can see, using img2img alone can alter the composition quite a bit.

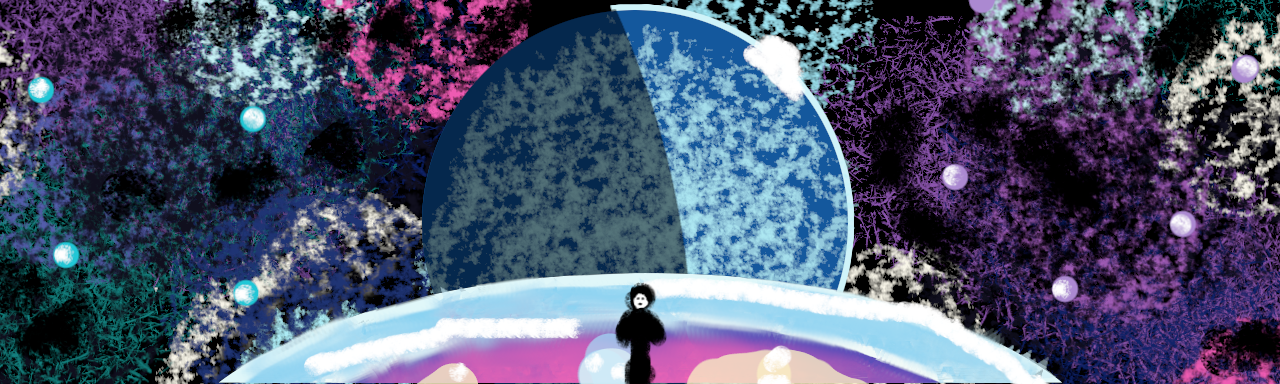

Video Game Concept Art

I’ve had a game idea floating around in my mind for a long time. I decided to put Stable Diffusion to the test by blocking out very basic shapes and colors in MS Paint and bringing them into img2img to flesh them out.

Art Deco

This was one of my first-ever experiments with Stable Diffusion. Recently I decided to return to it and improve the design to fit my original intent. This was done primarily with inpainting but also involved some manual editing in GIMP.

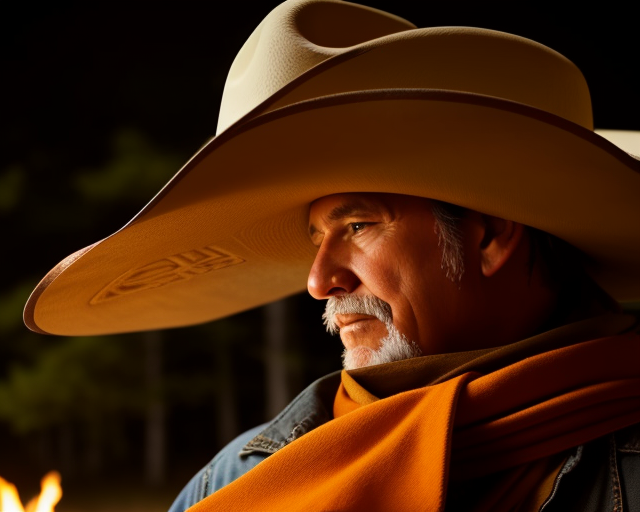

Pareidolia

Pareidolia is the phenomenon of seeing human faces in random patterns. One of the features of ControlNet is the Scribble model, which allows you to use a rough sketch or “scribble” to create images. I used some faces I found to demonstrate.

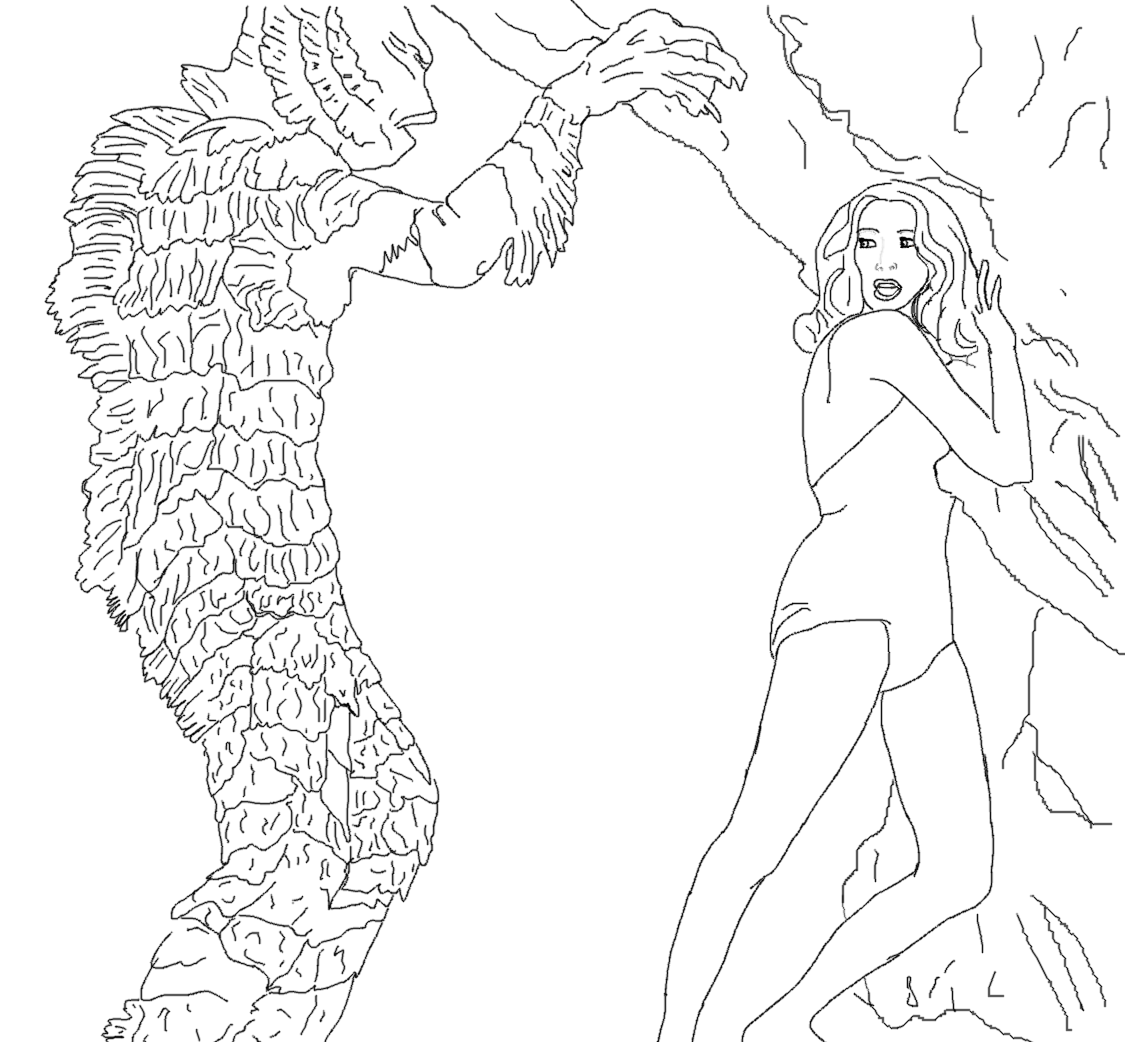

Swamp Thing

You can also use more detailed sketches if you have a better idea of what you want, or if you perhaps just don’t know when to stop while doing your concept drawing. Jokes aside, the more detail you put in, the more control you have over the final product.

Art Swap

I was challenged to take a rough drawing from another artist and make her art into my own. This is the result. In this case I did somewhat more than put it into ControlNet. It was an art challenge, after all.

Desktop Background

I bought a very large monitor with a resolution of 5120×1440 to help me improve my workflow. I use about six different windows while I’m working and I needed the extra space. Unfortunately, it’s hard to find cool desktop backgrounds that large. On the other hand, I’m an artist.